One of the most requested activities in FET Science (Physical, Technical and Engineering) is measuring the internal resistance of a battery.

Now, there are several hundred free experiments available for download here at the PASCO resource centre:

https://www.pasco.com/resources/lab-experiments

Unfortunately, not one of these covers internal resistance. “I will just have to develop one myself,” I thought. But then I reflected, “Ah, but in this age of AI, do you really have to do anything yourself?” Yes, you do. Let me show you why.

Here’s the first part of the transcript:

Please write an experiment to learn about the internal resistance of a battery.

ChatGPT

Certainly! Measuring the internal resistance of a battery is a valuable experiment to understand its performance. Here's a simple experiment to measure the internal resistance of a battery:

Title: Measuring the internal resistance of a battery

Objective: To determine the internal resistance of a given battery.

Materials:

- Battery to be tested

- Variable resistor (potentiometer)

- Voltmeter

- Ammeter

- Connecting wires

- Switch

- Resistors of known values

- Stopwatch or timer

- Safety goggles

I suppose ChatGPT thinks you always need a stopwatch and goggles to do a science lab, but neither is necessary, and neither is referenced in the detailed instructions that follow. Otherwise, the lab is clear, consistent, and practical, with step-by-step notes for dialling up the resistance while recording the current and voltage of the cell.
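For reference, a lab like this rests on the standard model of a cell with emf ε and internal resistance r driving a current I through a load resistance R. The notation below is ours, not ChatGPT's:

```latex
V = \varepsilon - I r, \qquad I = \frac{\varepsilon}{R + r}
\qquad\Longrightarrow\qquad
m = \frac{\Delta V}{\Delta I} = -r
```

Plotting terminal voltage V against current I should therefore give a straight line whose intercept is the emf and whose gradient is −r. The dialled load resistance R only sets the current, and a larger R means a smaller I and a higher terminal voltage.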

But when I asked for the teacher’s version, here is what I got:

ChatGPT

Certainly! Here's a sample data table and some teacher notes for the Battery Internal Resistance lab:

Sample data table:

| Resistance (ohms) | Voltage (V) | Current (A) | Internal Resistance (ohms) |
|-------------------|-------------|-------------|----------------------------|
| 5 | 4.8 | 0.96 | 5.00 |
| 10 | 4.7 | 0.47 | 10.00 |
| 15 | 4.5 | 0.30 | 15.00 |
| 20 | 4.3 | 0.21 | 20.48 |
| 25 | 4.1 | 0.16 | 25.63 |

OK, the table looks quite respectable, and you might well be inclined to believe it, especially given its low-grade, old-school, ‘machine code’ look and feel.
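Yet the table does not survive a simple check. The short Python sketch below is our own and is not part of the ChatGPT transcript. It copies the five rows, confirms that the “Internal Resistance” column is nothing more than V/I, which is simply the external load resistance, and then fits a least-squares straight line to V against I. It assumes the usual cell model V = ε − Ir, in which the gradient should equal −r.

```python
# Sanity-check ChatGPT's "sample data" against the simple cell model V = emf - I*r.
# The numbers are copied from the table above; the variable names are our own.

voltage = [4.8, 4.7, 4.5, 4.3, 4.1]              # "Voltage (V)" column
current = [0.96, 0.47, 0.30, 0.21, 0.16]         # "Current (A)" column
claimed_r = [5.00, 10.00, 15.00, 20.48, 25.63]   # "Internal Resistance (ohms)" column


def least_squares(x, y):
    """Return (slope, intercept) of the ordinary least-squares line y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Check 1: is the "internal resistance" column simply V / I (the external load R)?
# A tolerance of 0.01 ohm allows for the table's rounding to two decimals.
matches = all(abs(v / i - r) < 0.01 for v, i, r in zip(voltage, current, claimed_r))

# Check 2: fit V against I. For a real cell the gradient is -r, so it must be negative.
slope, intercept = least_squares(current, voltage)
internal_r = -slope

print("Internal-resistance column equals V/I:", matches)
print(f"slope = {slope:+.2f} V/A, implied r = {internal_r:.2f} ohm, intercept = {intercept:.2f} V")
```

Run as-is, the fit returns a positive slope of about +0.76 V/A, which would mean a negative internal resistance. That is physically impossible. As the load resistance goes up and the current falls, a real cell's terminal voltage should rise, whereas this table has it falling.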

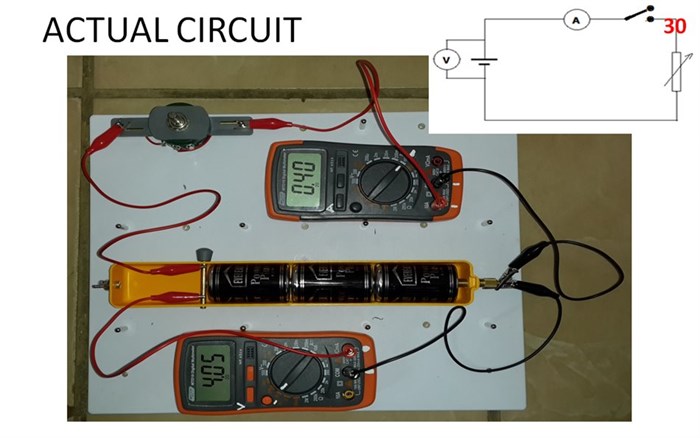

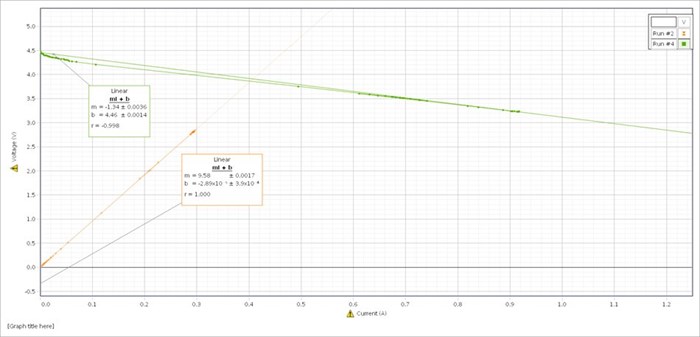

But here’s what happened when I physically did the work. I performed the complete experiment using PASCO sensors and software:

As ChatGPT correctly notes, the slope of this graph gives the internal resistance. Here the slope m is −1.34, i.e. an internal resistance of about 1.3 Ω, roughly what you would expect from a gently used AA alkaline cell.

I have no idea how the AI came up with its own “sample data”, nor what it could possibly mean. It certainly bears no relation to its own set of instructions, nor, for that matter, to any real-world results. It clearly contradicts itself, and this, I think, points to a much deeper problem with AI than the disclaimer “ChatGPT can get things wrong”, or, as some users put it, “ChatGPT makes things up”. The fact that it can detail a set of instructions and then cheerfully (and very politely) generate a corresponding data set of nonsense is concerning, not so much because of the error itself as because of its sheer obliviousness. You could even say that ChatGPT is blithely idiotic: apparently capable of serious and complex work with real human language, yet unable to handle its own output properly or grasp the implications of what it communicates. In a nutshell: artificial intelligence is not very intelligent.

This is how Noam Chomsky and others described it in a New York Times op-ed last year: “such programs are stuck in a prehuman or nonhuman phase of cognitive evolution. Their deepest flaw is the absence of the most critical capacity of any intelligence: to say not only what is the case and what will be the case – that’s description and prediction – but also what is not the case and what could and could not be the case. Those are the ingredients of explanation, the marks of true intelligence.” (my italics)

To me, the problem is not so much that ChatGPT made a mistake. Humans do that all the time, myself especially. The problem is that the software generated a table at odds both with reality and with its own written instructions. If a candidate did that in a job interview, we would be inclined to say: “They have no idea what they are talking about.”

ChatGPT has no idea what it is talking about. That much is clear to me now. But this is not to dismiss its usefulness. PERT has been operating since 1967, moving from written quotes to typewriters with carbon paper, then photocopies and faxes, word processors and spreadsheets, the internet, and now AI. All super useful, of course. But in the end, you still have to do the work yourself, which is perhaps not such a bad thing after all.